How Would Catastrophic Risks Affect Prospects for Compromise?

First written: 24 Feb. 2013; major updates: 13 Nov. 2013; last update: 4 Dec. 2017

Summary

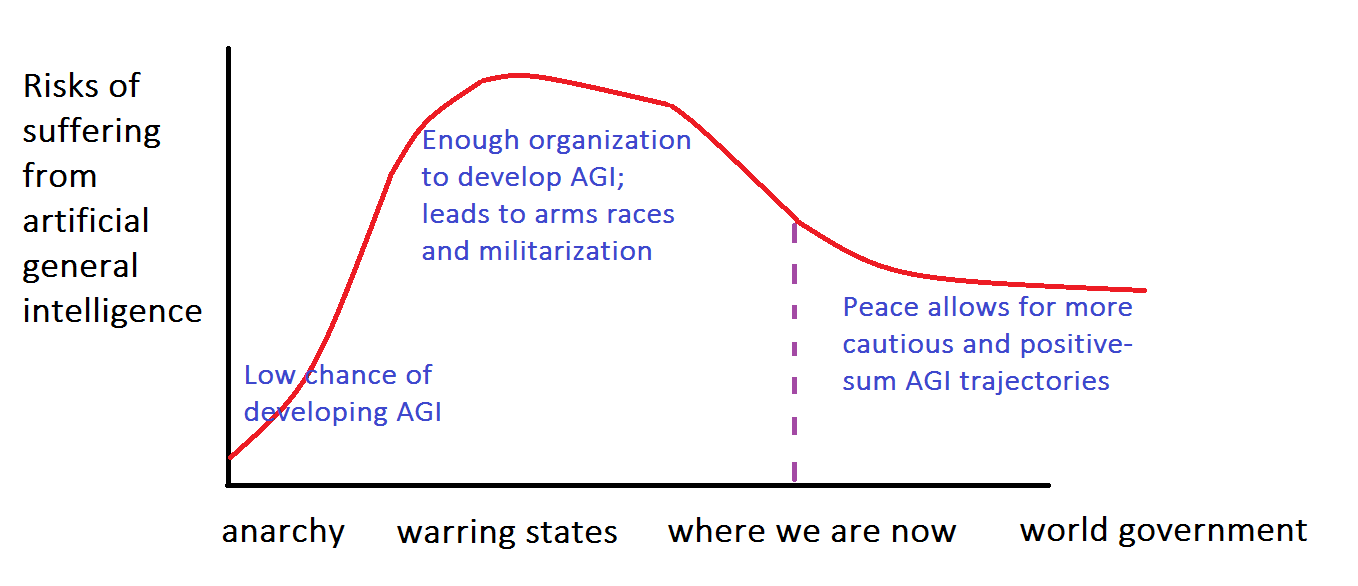

Catastrophic risks -- such as engineered pathogens, nanotech weapons, nuclear war, or financial collapse -- would cause major damage in the short run, but their effects on the long-run direction that humanity takes are also significant. In particular, to the extent these disasters increase risks of war, they may contribute to faster races between nations to build artificial general intelligence (AGI), less opportunity for compromise, and hence less of what everyone wants in expectation, including less suffering reduction. In this way, even pure negative utilitarians may oppose catastrophic risks, though this question is quite unsettled. While far from ideal, today's political environment is more democratic and peaceful than what we've seen historically and what could have been the case, and disrupting this trajectory might have more downside than upside. I discuss further considerations about how catastrophes could have negative and positive consequences. Even if averting catastrophic risks is net good to do, I see it as less useful than directly promoting compromise scenarios for AGI and setting the stage for such compromise via cooperative political, social, and cultural institutions.

Note, 20 Jul. 2015: Relative to when I first wrote this piece, I'm now less hopeful that catastrophic-risk reduction is plausibly good for pure negative utilitarians. The main reason is that some catastrophic risks, such as from malicious biotech, do seem to pose nontrivial risk of causing complete extinction relative to their probability of merely causing mayhem and conflict. So I now don't support efforts to reduce non-AGI "existential risks". (Reducing AGI extinction risks is a very different matter, since most AGIs would colonize space and spread suffering into the galaxy, just like most human-controlled future civilizations would.) Regardless, negative utilitarians should just focus their sights on more clearly beneficial suffering-reduction projects, like promoting suffering-focused ethical viewpoints and researching more how best to reduce wild-animal and far-future suffering.

Contents

- Introduction

- Most catastrophic risks would not cause extinction

- Degree of compromise as a key metric

- How contingent is the future?

- War as a key risk

- Dislocation makes conflict more likely

- If it ain't broke, don't fix it

- How robust is technological civilization?

- Might humans be replaced by other species?

- Other costs to catastrophes

- Silver linings to catastrophes

- What if the conclusions flipped?

- Is work on catastrophic risks optimal?

- Recovery measures are not supported by this argument

- Appendix: Inoculation in general

- Footnotes

Introduction

Some in the effective-altruist community consider global catastrophic risks to be a pressing issue. Catastrophic risks include possibilities of world financial collapse, major pandemics, bioweapons, nanoweapons, environmental catastrophes like runaway global warming, and nuclear war.

Typically discussions of these risks center on massive harm to humanity in the short term and/or remote risks that they would lead to human extinction, affecting the long term. In this piece, I'll explore another consideration that might trump both short-term harm and extinction considerations: the flow-through effects of catastrophic risks on the degree of compromise in future politics and safety of future technology.

Most catastrophic risks would not cause extinction

I think the only known technological development that is highly likely to cause all-out human extinction is AGI.[1] Carl Shulman has defended this view, although he notes that while he doesn't consider nanotech a big extinction risk, "Others disagree (Michael Vassar has worked with the [Center for Responsible Nanotechnology], and Eliezer [Yudkowsky] often names molecular nanotechnology as the [extinction] risk he would move to focus on if he knew that AI was impossible)." Reinforcing this assessment was the "Global Catastrophic Risks Survey" of 2008, in which the cumulative risk of extinction was estimated as at most 19% (median), the highest two subcomponents being AI risk and nanotech risk at median 5% each. Nuclear war was median 1%, consistent with general expert sentiment.

Of course, there's model uncertainty at play. Many ecologists, for instance, feel the risk of human extinction due to environmental issues is far higher than what those in the techno-libertarian circles cited in the previous paragraph believe. Others fear peak oil or impending economic doom. Still others may hold religious or philosophical views that incline them to find extinction likely via alternate means. In any event, whether catastrophic risks are likely to cause extinction is not relevant to the remainder of this piece, which will merely examine what implications -- both negative and positive -- catastrophic risks might have for the trajectory of social evolution conditional on human survival.

Degree of compromise as a key metric

Ignoring extinction considerations, how else are catastrophic risks likely to matter for the future? Of course, they would obviously cause massive human damage in the short run. But they would also have implications for the long-term future of humanity to the extent that they affected the ways in which society developed: How much international cooperation is there? How humane are people's moral views? How competitive is the race to develop technology the fastest?

!['President Gerald Ford and Soviet General Secretary Leonid Brezhnev sign a Joint Communiqué following talks on the limitation of strategic offensive arms. The document was signed in the conference hall of the Okeansky Sanitarium, Vladivostok, USSR.' David Hume Kennerly [Public domain], via Wikimedia Commons: https://commons.wikimedia.org/wiki/File:Ford_signing_accord_with_Brehznev,_November_24,_1974.jpg](https://longtermrisk.org/files/Ford_signing_accord_with_Brehznev_November_24_1974-350x238.jpg)

I think the extent of cooperation in the future is one of the most important factors to consider. A cautious, humane, and cooperative future has better prospects for building AGI in a way that avoids causing massive amounts of suffering to powerless creatures (e.g., suffering subroutines) than a future in which countries or factions race to build whatever AGI they can get to work so that they take over the world first. The AGI-race future could be significantly worse than the slow and cautious future in expectation, maybe 10%, 50%, 100%, or even 1000%. A cooperative future would definitely not be suffering-free and carries many risks, but the amount by which things could get much worse exceeds the amount by which they could get much better.

How contingent is the future?

Much of the trajectory of the future may be inexorable. Especially as people become smarter, we might expect that if compromise is a Pareto-improving outcome, our descendants should converge on it. Likewise, even if catastrophe sets humanity back to a much more primitive state, it may be that a relatively humane, non-violent culture will emerge once more as civilization matures. For example:

Acemoglu and Robinson’s Why Nations Fail [2012] is a grand history in the style of Diamond [1997] or McNeil [1963]. [...] Acemoglu and Robinson theorize that political institutions can be divided into two kinds - “extractive” institutions in which a “small” group of individuals do their best to exploit - in the sense of Marx - the rest of the population, and “inclusive” institutions in which “many” people are included in the process of governing hence the exploitation process is either attenuated or absent.

[...] inclusive institutions enable innovative energies to emerge and lead to continuing growth as exemplified by the Industrial Revolution. Extractive institutions can also deliver growth but only when the economy is distant from the technological frontier.

If this theory is right, it would suggest that more inclusive societies will in general tend to control humanity's long-run future.

Still, humanity's trajectory is not completely inevitable, and it can be sensitive to initial conditions.

- A society that has better institutions for cooperation may be able to achieve compromise solutions that would simply be unavailable to one in which those institutions did not exist, no matter how smart the people involved were.

- It may be that social values matter -- e.g., if people care more intrinsically about compromise, this can change the outcomes that result from pure game-theoretic calculations because the payoffs are different.

- Historical, cultural, and political conditions can influence Schelling points. Sometimes there are multiple possible Nash equilibria, and expectations determine which one is reached.

There's a long literature on the extent to which history is inevitable or contingent, but it seems that it's at least a little bit of both. Even in cases of modern states engaged in strategic calculations, there have been a great number of contingent factors. For example:

- As an analyst for US military policy, Thomas Schelling suggested strategies and safeguards that would not have been thought of without him. Roger Myerson said: "You know, I think there's some chance that Tom Schelling may have saved the world." At least as dramatic is Stanislav Petrov helping to avert accidental nuclear war.

- Confronting the Bomb: A Short History of the World Nuclear Disarmament Movement argues that anti-nuclear activism made some key differences in how governments conducted nuclear policy, including Ronald Reagan's flip in stance from very hawkish to dovish, in part due to the largest political demonstration in American history on 12 June 1982 against increasing nuclear arsenals. This is one example demonstrating that political action can make a non-inevitable difference to even highly strategic matters of world dominance by major powers.

- In general, US presidents have sometimes had significant contingent effects on the nation's direction.

- There are countless other historical examples that could be cited.

Even if we think that greater intelligence by future people will mean less contingency in how events play out, we clearly won't eliminate contingency any time soon, and the decisions we make in the coming decades may matter a lot to the final outcome.

In general, if you think there's only an X% chance that the success or failure of compromise to avoid an AGI arms race is contingent on what we do, you can multiply the expected costs/benefits of our actions by X%. But probably X should not be too small. I would put it definitely above 33%, and a more realistic estimate should be higher.
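As a rough illustration of that discounting step, here is a minimal Python sketch. The function name `contingency_adjusted` and the effect size of 100 (in arbitrary units) are assumptions made purely for illustration; only the 33% floor comes from the estimate above.

```python
def contingency_adjusted(expected_effect: float, p_contingent: float) -> float:
    """Scale an action's expected effect by the probability that the
    outcome is actually contingent on what we do."""
    if not 0.0 <= p_contingent <= 1.0:
        raise ValueError("p_contingent must be a probability in [0, 1]")
    return expected_effect * p_contingent


# Hypothetical effect size in arbitrary units (an assumption, not from the text).
baseline_effect = 100.0

# 0.33 is the floor suggested in the text; the higher values are illustrative.
for x in (0.33, 0.5, 0.75):
    adjusted = contingency_adjusted(baseline_effect, x)
    print(f"X = {x:.0%}: adjusted effect = {adjusted:.1f}")
```

The point of the sketch is only that the discount is linear: unless X is very small, it shrinks the estimated stakes by a modest constant factor rather than eliminating them.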

War as a key risk

What factors are most likely to lead to an AGI arms race in which groups compete to build whatever crude AGI works rather than cautiously constructing an AGI that better encapsulates many value systems, including that of suffering reduction? If AGI is built by corporations, then fierce market competition could be risky. That said, it seems most plausible to me that AGI would be built by, or at least under the control of, governments, because unless the AGI project took off really quickly, it seems the military would not allow a national (indeed, world) security threat to proceed without restraint.

In this case, the natural scenario that could lead to a reckless race for AGI would be international competition -- say, between the US and China. AGI would be in many ways like nuclear weapons, because whoever builds it first can literally take over the world (though Carl Shulman points out some differences between AGI and nuclear weapons as well).

If we think historically about what has led to nuclear development, it has always been international conflict, usually precipitated by wars:

- World War II

    - The US Manhattan Project, which required over 130,000 people and cost ~$26 billion in 2013 dollars.

    - The uncompleted Nazi nuclear project.

- Cold War

    - Soviet Union, China, UK, France, etc.

- India-China conflict

    - Led to India's development of nuclear weapons.

- India-Pakistan conflict

    - Harsh treaty conditions following the Indo-Pakistani War of 1971 spurred Pakistan's nuclear-weapons program.

- Israeli conflicts with Middle Eastern neighbors

    - Led to Israel's nuclear-weapons program.

In general, war tends to cause:

- Fast technological development, often enhanced by major public investments

- Willingness to take risks in order to develop the technology first

- International hostility that makes cooperation difficult.

Thus, war seems to be a major risk factor for fast AGI development that results in less good and greater expected suffering than more careful, cooperative scenarios. To this extent, anything else that makes war more likely entails some expected harm through this pathway.

Dislocation makes conflict more likely

While catastrophic risks are unlikely to cause extinction, they are fairly likely to cause damage on a mass scale. For example, from the "Global Catastrophic Risks Survey":

| Catastrophe | Median probability of >1 million dead | Median probability of >1 billion dead | Median probability of extinction |
| --- | --- | --- | --- |
| nanotech weapons | 25% | 10% | 5% |
| all wars | 98% | 30% | 4% |
| biggest engineered pandemic | 30% | 10% | 2% |
| nuclear wars | 30% | 10% | 1% |
| natural pandemic | 60% | 5% | 0.05% |

...and so on. Depending on the risk, these disasters may or may not contribute appreciably to risks of AGI arms race, and it would be worth exploring in more detail which risks are most likely to lead to a breakdown of compromise. Still, in general, all of these risks seem likely to increase the chance of warfare, and by that route alone, they imply nonzero risks for increasing suffering in the far future.

If it ain't broke, don't fix it

For all its shortcomings, contemporary society is remarkably humane by historical standards and relative to what other possibilities one can imagine. This trend is unmistakable to anyone who reads history books and witnesses how often societies in times past were controlled by violent takeover, fear, and oppression. Steven Pinker's The Better Angels of Our Nature is a quantitative defense of this thesis. Pinker cites "six major trends" toward greater peace and cooperation that have taken place in the past few millennia:

- The Pacification Process: The beginnings of cities and governments

- The Civilizing Process: Smaller territories uniting into larger kingdoms

- The Humanitarian Revolution: Reductions in slavery, torture, corporal punishment, etc.

- The Long Peace: After World War II, the great powers have not directly fought each other

- The New Peace: A short-term (and hence "more tenuous") decline in conflicts since the end of the Cold War

- The Rights Revolutions: Greater moral consideration for women, minorities, homosexuals, animals, etc.

Catastrophic risks -- especially those like nuclear winter that could set civilization back to a much less developed state -- are essentially a "roll of the dice" on the initial conditions for how society develops, and while things could certainly be better, they could also be a lot worse.

To a large extent, it may be that the relatively peaceful conditions of the present day are a required condition for technological progress, such that any advanced civilization will necessarily have those attributes. Insofar as this is true, it reduces the expected cost of catastrophic risks. But there's some chance that these peaceful conditions are not inevitable. One could imagine, for instance, a technologically advanced dictatorship taking control instead, and insofar as its policies would be less determined by those of its population, the degree of compromise with many value systems would be reduced in expectation. Consider ancient Sparta, monarchies, totalitarian states, the mafia, gangs, and many other effective forms of government where ruthlessness by leaders is more prevalent than compassion.

Imagine what would have happened if Hitler's atomic-bomb project had succeeded before the Manhattan Project. Or if the US South had won the American Civil War. Or various other scenarios. Plausibly the modern world would have turned out somewhat similar to the present (for instance, I doubt slavery would have lasted forever even if the US South had won the Civil War), but conditions probably would have been somewhat worse than they are now. (Of course, various events in history also could have turned out better than they actually did.)

A Cold War scenario seems likely to accelerate AGI relative to its current pace. Compare with the explosion of STEM education as a result of the Space Race.

How robust is technological civilization?

Civilization in general seems to me fairly robust. The world witnessed civilizations emerge independently all over the globe -- from Egypt to the Fertile Crescent to China to the Americas. The Mayan civilization was completely isolated from happenings in Africa and Asia and yet shared many of the same achievements. It's true that civilizations often collapse, but they just as often rebuild themselves. The history of ancient Egypt, China, and many other regions of the world is a history of an empire followed by its collapse followed by the emergence of another empire.

It's less clear whether industrial civilization is robust. One reason is that we haven't seen completely independent industrial revolutions in history, since trade was well developed by the time industrialization could take place. Still, for example, China and Europe were both on the verge of industrial revolutions in the early 1800s[2], and the two civilizations were pretty independent, despite some trade. China and Europe invented the printing press independently.[3] And so on.

Given the written knowledge we've accumulated, it's implausible that post-disaster peoples would fail to relearn how to build industry. But it's not clear whether they would have the resources and/or social organization requisite to do so. Consider how long it takes for developing nations to industrialize even with relative global stability and trade. On the other hand, military conflicts if nothing else would probably force post-disaster human societies to improve their technological capacities at some point. Technology seems more inevitable than democracy, because technology is compelled by conflict dynamics. Present-day China is an example of a successful, technologically advanced non-democracy. (Of course, China certainly exhibits some degree of deference to popular pressure, and conversely, Western "democracies" also give excessive influence to wealthy elites.)

Some suggest that rebuilding industrial civilization might be impossible the second time around because abundant surface minerals and easy-to-drill fossil fuels would have been used up. Others contend that human ingenuity would find alternate ways to get civilization off the ground, especially given the plenitude of scientific records that would remain. I incline toward the latter of these positions, but I maintain modesty on this question. It reflects a more general divide between scarcity doomsayers vs. techno-optimists. (The "optimist" in "techno-optimist" is relative to the goal of human economic growth, not necessarily reducing suffering.)

Robin Hanson takes the techno-optimist view:

Once [post-collapse humans] could communicate to share innovations and grow at the rate that our farming ancestors grew, humanity should return to our population and productivity level within twenty thousand years. (The fact that we have used up some natural resources this time around would probably matter little, as growth rates do not seem to depend much on natural resource availability.)

But even if historical growth rates didn't depend much on resources, might there be some minimum resource threshold below which resources do become essential? Indeed, in the limit of zero resources, growth is not possible.

A blog post by Carl Shulman includes a section titled "Could a vastly reduced population eventually recover from nuclear war?". It reviews reasons why rebuilding civilization would be harder and reasons it would be easier the second time around. Shulman concludes: "I would currently guess that the risk of permanent drastic curtailment of human potential from failure to recover, conditional on nuclear war causing the deaths of the overwhelming majority of humanity, is on the lower end." Shulman also seems to agree with the (tentative and uncertain) main thrust of my current article: "Trajectory change" effects of civilizational setback, possibly including diminution of liberal values, "could have a comparable or greater role in long-run impacts" of nuclear war (and other catastrophic risks) than outright extinction.

Stuart Armstrong also agrees that rebuilding following nuclear war seems likely. He points out that formation of governments is common in history, and social chaos is rare. There would be many smart, technically competent survivors of a nuclear disaster, e.g., in submarines.

Might humans be replaced by other species?

As noted above, full-out human extinction from catastrophic risks seems relatively unlikely compared with just social destabilization. If human extinction did occur from causes other than AI, presumably parts of the biosphere would still remain. In many scenarios, at least some other animals would survive. What's the probability that those animals would then replace humans and colonize space? My guess is it's small but maybe not negligibly so. Robin Hanson seems to agree: "it is also possible that without humans within a few million years some other mammal species on Earth would evolve to produce" a technological civilization.

In the history of life on Earth, bony fish and insects emerged around 400 million years ago (mya). Dinosaurs emerged around 250 mya. Mammals blossomed less than 100 mya. Earth's future allows for about 1000 million years of life to come. So even if, as the cliche goes, the most complex life remaining after nuclear winter was cockroaches, there would still be 1000 million years in which human-like intelligence might re-evolve, and it took just 400 million years the first time around starting from insect-level intelligence. Of course, it's unclear how improbable the development of human-like intelligence was. For instance, if the dinosaurs hadn't been killed by an asteroid, plausibly they would still rule the Earth, without any advanced civilization.[4] The Fermi paradox also bears on how likely we should judge the evolution of advanced intelligence from ordinary animal life to be.

Some extinction scenarios would involve killing all humans but leaving higher animals. Perhaps a bio-engineered pathogen or nanotech weapon could do this. In that case, re-emergence of intelligence would be even more likely. For example, cetaceans made large strides in intelligence 35 mya, jumping from an encephalization quotient (EQ) of 0.5 to 2.1. Some went on to develop EQs of 4-5, which is close to the human EQ of 7. As quoted in "Intelligence Gathering: The Study of How the Brain Evolves Offers Insight Into the Mind," Lori Marino explains:

Cetaceans and primates are not closely related at all, but both have similar behavior capacities and large brains -- the largest on the planet. Cognitive convergence seems to be the bottom line.

One hypothesis for why humans have such large brains despite metabolic cost is that big brains resulted from an arms race of social competition. Similar conditions could obtain for cetaceans or other social mammals. Of course, many of the other features of primates that may have given rise to civilization are not present in most other mammals. In particular, it seems hard to imagine developing written records underwater.

If another species took over and built a space-faring civilization, would it be better or worse than our own? There's some chance it could be more compassionate, such as if bonobos took our place. But it might also be much less compassionate, such as if chimpanzees had won the evolutionary race, not to mention killer whales. On balance it's plausible our hypothetical replacements would be less compassionate, because compassion is something humans value a lot, while a random other species probably values something else more. The reason I'm asking this question in the first place is that humans are outliers in their degree of compassion. Still, in social animals, various norms of fair play are likely to emerge regardless of how intrinsically caring the species is.

Simon Knutsson pointed out to me that if human survivors do recover from a near-extinction-level catastrophe, or if humans go extinct and another species with potential to colonize space evolves, they'll likely need to be able to cooperate rather than fighting endlessly if they are to succeed in colonizing space. This suggests that if they colonize space, they will be more moral or peaceful than we were. My reply is that while this is possible, a rebuilding civilization or new species might curb infighting via authoritarian power structures or strong ingroup loyalty that doesn't extend to outgroups, which might imply less compassion than present-day humans have.

My naive guess is that it's relatively unlikely another species would colonize space if humans went extinct -- maybe a ~10% chance? I suspect that most of the Great Filter is behind us, and some of those filter steps would have to be crossed again for a new non-human civilization to emerge. As long as that new civilization wouldn't be more than several times worse in expectation than our current civilization, then this scenario is unlikely to dominate our calculations.
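The comparison in the previous paragraph can be sketched numerically. This is a simplified model rather than a calculation from any source: it assumes that a human-built spacefaring civilization has expected disvalue normalized to 1, and the function names and the list of multipliers are hypothetical. Only the ~10% probability is the guess stated above.

```python
def replacement_weight(p_replacement: float, times_worse: float) -> float:
    """Expected disvalue of the replacement-species scenario, in units where
    a human-built spacefaring civilization has disvalue 1 (an assumption)."""
    return p_replacement * times_worse


def break_even_multiplier(p_replacement: float) -> float:
    """How many times worse the replacement civilization would need to be
    for its expected disvalue to equal the baseline of 1."""
    return 1.0 / p_replacement


P_REPLACEMENT = 0.10  # the ~10% guess from the text

for times_worse in (1, 3, 10, 30):  # hypothetical multipliers
    weight = replacement_weight(P_REPLACEMENT, times_worse)
    print(f"{times_worse:>2}x worse: expected weight {weight:.2f} vs. baseline 1.00")

print(f"Break-even multiplier: {break_even_multiplier(P_REPLACEMENT):.0f}x")
```

Under these assumptions, the replacement scenario matches the baseline only when the new civilization is about ten times worse, which is why a civilization that is merely "several times worse" would be unlikely to dominate the calculation.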

Other costs to catastrophes

Greater desperation

In general, people in more hardscrabble or fearful conditions have less energy and fewer emotional resources to concern themselves with the suffering of others, especially with powerless computations that might be run by a future spacefaring civilization. Fewer catastrophes mean more people who can focus on averting suffering by other sentients.

Darwinian futures?

Current long-term political trends suggest that a world government may develop at some point, as is hinted by the increasing degrees of unity among rich countries (European Union, international trade agreements, etc.). A world government would offer greater possibilities for enforcing mutually beneficial cooperation and thereby fulfilling more of what all value systems want in expectation, relative to unleashing a Darwinian future.

Silver linings to catastrophes

In this section I suggest some possible upsides of catastrophic risks. I think it's important not to shy from these ideas merely because they don't comport with our intuitive reactions. Arguments should not be soldiers. At the same time, it's also essential to constrain our speculations in this area by common sense.

Also note that even in the unlikely event that we concluded catastrophic risks were net positive for the far future, we should still not support them, to avoid stepping on the toes of so many other people who care deeply about preventing short-term harm. Rather, in this hypothetical scenario, we should find other, win-win ways to improve the future that don't encroach on what so many other people value.

Greater concern for suffering?

Is it possible that some amount of disruption in the near term could heighten concern about potential future sources of suffering, whereas if things go along smoothly, people will give less thought to futures full of suffering? This question lies in analogy with the concern that reducing hardship and depression might make people less attuned to the pain of others. Many of the people I know who care most about reducing suffering have gone through severe personal trauma or depression at one point. When things are going well, you can forget how horrifying suffering can be.

It's often said that World War I transformed art and cultural attitudes more generally. Johnson (2012): "During and after World War I, flowery Victorian language was blown apart and replaced by more sinewy and R-rated prose styles. [...] 'World War I definitely gives a push forward to the idea of dystopia rather than utopia, to the idea that the world is going to get worse rather than better,' Braudy said."

More time for reflection?

Severe catastrophes might depress economic output and technological development, with the possibility of allowing more time for reflection on the risks that such technology would bring. That said, this cuts both ways: Faster technology also allows for faster wisdom, better ability to monitor tech developments, and greater prosperity that allows more people to even think about these questions, as well as reduced social animosity and greater positive-sum thinking. The net sign of all of this is very unclear.

Resource curse?

There are suggestions (hotly debated) in the political-science literature of a "resource curse" in which greater reserves of oil and other natural resources may contribute to authoritarianism and repression. The Wikipedia article cites a number of mechanisms by which the curse may operate. A related trend is the observation that cooler climates sometimes have a greater degree of compassion and cooperation -- perhaps because to survive cold winters you have to work together, while in warm climates, success is determined by being the best at forcibly stealing the resources that already exist?

To the extent these trends are valid, does this suggest that if humanity were to rebuild after a significant catastrophe, it might be more democratic owing to having smaller reserves of oil, metals, and other resources?

The "Criticisms" section of the Wikipedia article explains that some studies attribute causation in the other direction: Greater authoritarianism leads countries to exploit their resources faster. Indeed, some studies even find a "resource blessing."

Greater impetus for cooperation?

Fighting factions are often brought together when they face a common enemy. For instance, in the 1954 Robbers Cave study, the two hostile factions of campers were brought together by "superordinate goals" that required them to unite to solve a problem they all faced. Catastrophic risks are a common enemy of humanity, so could efforts to prevent them build cooperative institutions? For instance, cooperation to solve climate change could be seen as an easy test bed for the much harder challenges that will confront humanity in cooperating on AGI. Could greater climate danger provide greater impetus for building better cooperative institutions early on? Wolf Bullmann compared this to vaccination.

Of course, this is not an argument against building international-cooperation efforts against climate change -- those are the very things we want to happen. But it would be a slight counter-consideration against, say, personally trying to reduce greenhouse-gas emissions. I hasten to explain that this "silver lining" point is extremely speculative, and on balance, it seems most plausible that personally reducing greenhouse-gas emissions is net good overall, in terms of reducing risks of wars that could degrade into bad outcomes. The "inoculation" idea (see the Appendix) should be explored further, though it needs to be cast in a way that's not amenable to being quoted out of context.

Minority views in defense of alternate political systems

Reshuffling world political conditions could produce a better outcome, and indeed, there are minority views that greater authoritarianism could actually improve future prospects by reducing coordination problems and enforcing safeguards. We should continue to explore a broad range of viewpoints on these topics, while at the same time not wandering too easily from mainstream consensus.

What if the conclusions flipped?

Like any empirical question, the net impact of catastrophic risks on the degree of compromise in the future isn't certain, and a hypothetical scenario in which we concluded that catastrophic risks would actually improve compromise is not impossible. Pure negative utilitarians who also fear the risks of astronomical suffering that space colonization would entail are the ones most likely to be unconcerned about catastrophic risks. I find it plausible that the detrimental effects of catastrophic risks on compromise outweigh effects on probability of colonization, but this conclusion is contingent and not inevitable. What if the calculation flipped around?

Even if so, we should still probably oppose catastrophic risks when it's very cheap to do so, and we should never support them. Why? Because many other people care a lot about preventing short-term disasters, and stepping on so many toes so dramatically would not be an efficient course of action, much less a wise move by any reasonable heuristics about how to get along in society. Rather, we should find other, win-win approaches to improving compromise prospects that everyone can get behind. In any event, even ignoring the importance of cooperating with other people, it seems unlikely that focusing on catastrophic risks would be the best leverage point for accomplishing one's goals.

Is work on catastrophic risks optimal?

My guess is that there are better projects for altruists to pursue, because

- Working directly on improving cooperation scenarios for AGI seems to target the problem more head-on, and at the same time, this field has less funding because it's more fringe and has fewer of the immediately visible repercussions and historical precedents that tend to motivate mainstream philanthropists, and

- Working directly on improving worldwide cooperation, global governance, etc. in general also seems more promising insofar as these efforts are, in my mind, more clearly positive with fewer question marks and shorter causal chains to the ultimate goal. If catastrophic risks were clearly being neglected relative to general compromise work, then the calculation might change, but as things stand, I would prefer to push on compromise directly.

Recovery measures are not supported by this argument

The argument in this essay applies only to preventing catastrophes before they happen, so as to reduce societal dislocation. It doesn't endorse measures to ensure human recovery after catastrophes have already happened, such as disaster shelters or space colonies. These post-disaster measures don't avert the increased anarchy and confusion that would result from catastrophes but do help humans stick around to potentially cause cosmic harm down the road. Moreover, disaster-recovery solutions might even increase the chance that catastrophes occur because of moral hazard. I probably don't endorse post-disaster recovery efforts except maybe in rare cases when they also substantially help to maintain social stability in scenarios that cause less-than-extinction-level damage.

Appendix: Inoculation in general

Inoculation

The idea of inoculation -- accepting some short-term harm in order to improve long-term outcomes -- is a general concept.

Even with warfare, there's some argument about an inoculation effect. For example, the United Nations was formed after World War II in an effort to prevent similar conflicts from happening again. And there's a widespread debate about inoculation in activism. Sometimes the "radicals" fear that if the "moderates" compromise too soon, the partial concessions will quell discontent and prevent a more revolutionary change. For example, some animal advocates say that if we improve the welfare of farm animals, people will have less incentive to completely "end animal exploitation" by going vegan. In this case, the radicals claim that greater short-term suffering is the inoculation necessary to prevent long-term "exploitation."

Slippery slopes

The flip side to inoculation is the slippery slope: A little bit of something in the short term tends to imply more of it in the long term. In general, I think slippery-slope arguments are stronger than inoculation arguments, with some exceptions like in organisms' immune systems.

Usually wars cause more wars. World War II would not have happened absent World War I. Conflicts breed animosity and perpetuate a cycle of violence as tit-for-tat retributions continue indefinitely, with each side claiming the other side was the first aggressor. We see this in terrorism vs. counter-terrorism response and in many other domains.

Likewise, a few animal-welfare reforms now can enhance a culture of caring about animals that eventually leads to greater empathy for them. The Humane Society of the United States (HSUS) is often condemned by more purist animal-rights advocates as being in the hands of Big Ag, but in fact, Big Ag has a whole website devoted to trying to discredit HSUS. This is hardly behavior that one would expect if HSUS is actually helping ensure Big Ag's long-term future.

Footnotes

- Note that even if AGI causes human extinction, it would likely still undertake space colonization to advance whatever value it wanted to maximize.

- See When China Rules the World, Ch. 2.

- "Gutenberg and the history of the printing press": "Gutenberg was unaware of the Chinese and Korean printing methods."

- John Maxwell disputes this claim. My reasoning is that dinosaurs lasted for at least 135 million years but only went extinct 66 mya. It's easy to imagine that dinosaurs might have lasted, say, twice as long as they did, in which case they would still rule the Earth today.